Rapid technological progress has brought a daunting challenge: the proliferation of deepfakes. These synthetic or manipulated media, produced with steadily improving artificial intelligence and machine learning techniques, pose a substantial cybersecurity risk. To address the threat, the United States' National Security Agency (NSA), in partnership with the Federal Bureau of Investigation (FBI) and the Cybersecurity and Infrastructure Security Agency (CISA), has issued a Cybersecurity Information Sheet (CSI) titled "Contextualizing Deepfake Threats to Organizations."

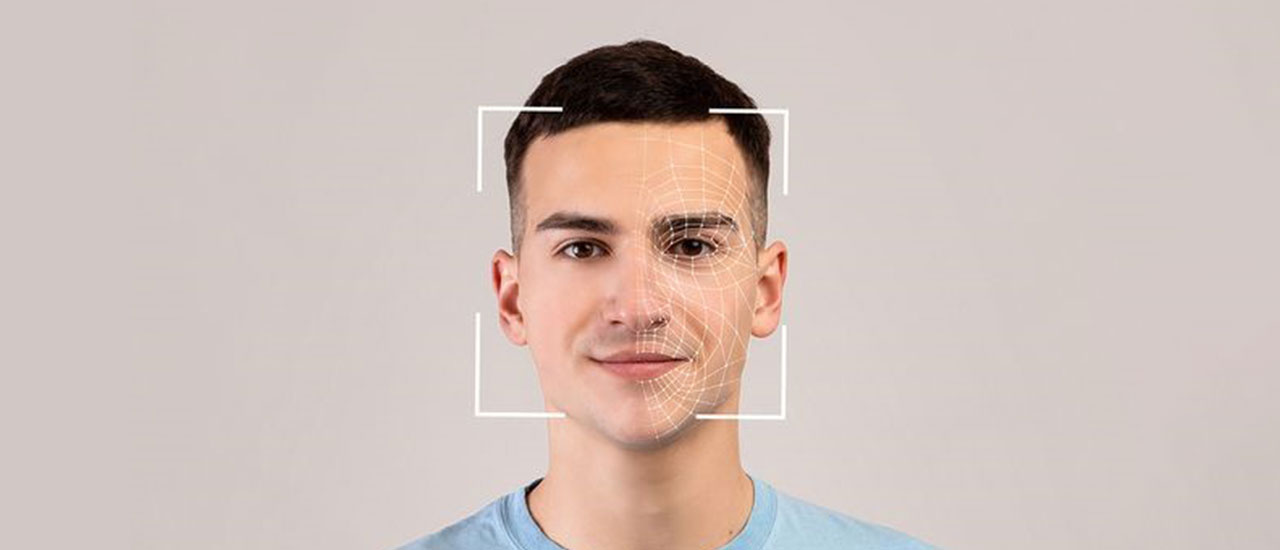

Deepfakes are multimedia content that has been synthetically generated or manipulated using advanced machine learning techniques, and they mark a significant shift in media manipulation. These technologies enable malicious actors to craft convincing replicas of real individuals doing or saying things they never did or said. Related terms for synthetic or manipulated media include Shallow/Cheap Fakes, Generative AI, and Computer Generated Imagery (CGI).

Candice Rockell Gerstner, an NSA Applied Research Mathematician specializing in Multimedia Forensics, underscores the gravity of the situation: "The tools and techniques for manipulating authentic multimedia are not new, but the ease and scale with which cyber actors are using these techniques are. This creates a new set of challenges to national security." Organizations must equip themselves to recognize deepfake techniques and have comprehensive response plans in place. The CSI outlines strategies for organizations to mitigate the deepfake threat:

- Real-time Verification Capabilities: Implement technologies for real-time verification of multimedia content to confirm its authenticity.

- Passive Detection Techniques: Employ passive detection techniques to identify deepfakes before they cause harm.

- Protection of High-Priority Officers and Communications: Prioritize safeguarding high-ranking officials and their communication channels, which are often the primary targets of deepfake attacks.

- Minimizing Impact: The guidance emphasizes information sharing, planning, rehearsing responses, and personnel training to mitigate deepfake impact.
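As a rough illustration of what one small piece of content verification could look like in practice, the sketch below compares a received media file against a SHA-256 digest published by the original source over a trusted channel. This is not taken from the CSI; the function names `sha256_of_file` and `matches_published_digest` are hypothetical, and the approach assumes the organization already publishes digests for its official media. A match only shows that the file is byte-for-byte identical to the published original. It does not detect whether the content itself is synthetic.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # iter() with a sentinel keeps calling read() until it returns b"" (end of file).
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_digest(path: Path, published_hex: str) -> bool:
    """Check a file against a digest obtained from a trusted channel (an assumption here)."""
    actual = sha256_of_file(path)
    # Normalize case and whitespace, then compare in constant time.
    return hmac.compare_digest(actual, published_hex.strip().lower())
```

A caller would fetch the published digest separately from the media itself, for example from the organization's official site, and treat any mismatch as a reason to escalate rather than as proof of a deepfake.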

The deepfake threat extends beyond cybersecurity: deepfakes can be used to undermine brands, impersonate leaders, enable fraudulent communications, and incite public unrest by spreading false information. Advances in computational power and deep learning have made mass production of fake media easier and more affordable, even for people with minimal technical skills. Many deep learning-based algorithms are readily available on open-source platforms, which adds to the national security risk.

The joint release of the CSI by the NSA, FBI, and CISA is a pivotal step in addressing the deepfake threat. It equips organizations with the knowledge they need to defend against evolving threats. Security professionals across sectors should heed this guidance and implement the recommended strategies to protect their organizations from deepfake influence. Doing so helps preserve national security and the integrity of the information landscape at a time when reality and fiction blur.